TensorFlow Syntax

Session

# Method 1

sess = tf.Session()

result = sess.run(dot_operation)

sess.close()

# Method 2

with tf.Session() as sess:

    result = sess.run(dot_operation)

# Run multiple ops

z1_value, z2_value = sess.run([z1, z2])

Placeholder

x1 = tf.placeholder(dtype=tf.float32, shape=None)

y1 = tf.placeholder(dtype=tf.float32, shape=None)

z1 = x1 + y1

x2 = tf.placeholder(dtype=tf.float32, shape=[2, 1])

y2 = tf.placeholder(dtype=tf.float32, shape=[1, 2])

z2 = tf.matmul(x2, y2)

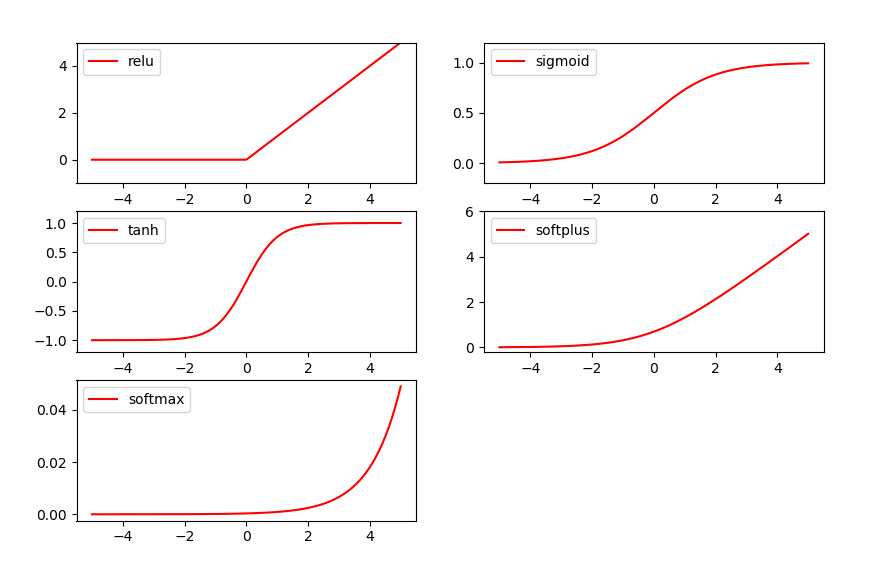

Activation

x = np.linspace(-5, 5, 200) # x data, shape=(200,)

y_relu = tf.nn.relu(x)

y_sigmoid = tf.nn.sigmoid(x)

y_tanh = tf.nn.tanh(x)

y_softplus = tf.nn.softplus(x)

y_softmax = tf.nn.softmax(x)

y_relu, y_sigmoid, y_tanh, y_softplus, y_softmax = sess.run(
    [y_relu, y_sigmoid, y_tanh, y_softplus, y_softmax])

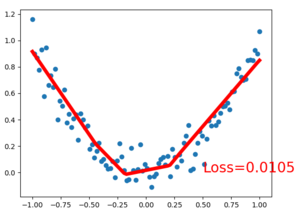
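
For reference, the five activations plotted above can be written out with the standard library alone. This is a minimal sketch, not TensorFlow's implementation: the helper names are made up for illustration, and softmax is taken over the whole 1-D input, which is what applying `tf.nn.softmax` to a vector amounts to.

```python
import math

def relu(v):
    # max(0, v)
    return max(0.0, v)

def sigmoid(v):
    # 1 / (1 + e^-v), squashes to (0, 1)
    return 1.0 / (1.0 + math.exp(-v))

def tanh(v):
    # squashes to (-1, 1)
    return math.tanh(v)

def softplus(v):
    # log(1 + e^v), a smooth approximation of ReLU
    return math.log1p(math.exp(v))

def softmax(values):
    # normalised exponentials over the whole vector;
    # the max is subtracted first for numerical stability
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

# 200 evenly spaced points on [-5, 5], like np.linspace(-5, 5, 200)
xs = [-5 + 10 * i / 199 for i in range(200)]

y_relu = [relu(v) for v in xs]
y_sigmoid = [sigmoid(v) for v in xs]
y_tanh = [tanh(v) for v in xs]
y_softplus = [softplus(v) for v in xs]
y_softmax = softmax(xs)
```

Unlike the other four, softmax is not element-wise: its outputs depend on every input and sum to 1.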

Simple Regression

tf_x = tf.placeholder(tf.float32, x.shape) # input x

tf_y = tf.placeholder(tf.float32, y.shape) # input y

# one hidden fully connected layer

l1 = tf.layers.dense(tf_x, 10, tf.nn.relu) # hidden layer

output = tf.layers.dense(l1, 1) # output layer => output one float number

loss = tf.losses.mean_squared_error(tf_y, output) # compute loss

optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.5)

train_op = optimizer.minimize(loss)

sess = tf.Session() # control training and others

sess.run(tf.global_variables_initializer()) # initialize var in graph

for step in range(100):

    # train and net output

    _, l, pred = sess.run([train_op, loss, output], {tf_x: x, tf_y: y})

    # plot and show learning process

    if step % 5 == 0:

        plt.cla() # clear current axis (clear previous drawn line)

        plt.scatter(x, y)

        plt.plot(x, pred, 'r-', lw=5)

        plt.text(0.5, 0, 'Loss=%.4f' % l, fontdict={'size': 20, 'color': 'red'})

        plt.pause(0.1)

plt.show()
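
To make the training step less opaque, here is a rough sketch of what minimizing a mean squared error with plain gradient descent does. It is a deliberate simplification: a single linear unit `w * x + b` stands in for the two dense layers, the toy data (`y = 2x + 1`) is hypothetical, and the gradients are written by hand rather than derived by TensorFlow.

```python
# Hypothetical toy data: y = 2x + 1 on 50 points in [-1, 1]
xs = [-1 + 2 * i / 49 for i in range(50)]
ys = [2 * x + 1 for x in xs]

w, b = 0.0, 0.0          # trainable parameters
learning_rate = 0.5      # same value as the optimizer above
n = len(xs)

for step in range(100):
    # forward pass
    preds = [w * x + b for x in xs]
    errors = [p - y for p, y in zip(preds, ys)]

    # mean squared error
    loss = sum(e * e for e in errors) / n

    # gradients of the loss with respect to w and b
    grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_b = 2 * sum(errors) / n

    # gradient descent update
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
```

On this noise-free data `w` and `b` settle at the values used to generate it; with a hidden ReLU layer the update rule is the same, only the gradients are more involved.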

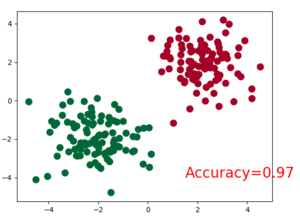

Simple Classification

tf_x = tf.placeholder(tf.float32, x.shape) # input x

tf_y = tf.placeholder(tf.int32, y.shape) # input y

# neural network layers

l1 = tf.layers.dense(tf_x, 10, tf.nn.relu) # hidden layer

output = tf.layers.dense(l1, 2) # output layer

# Use cross entropy to compute loss

loss = tf.losses.sparse_softmax_cross_entropy(labels=tf_y, logits=output)

optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.05)

train_op = optimizer.minimize(loss)

# Use accuracy to measure performance

# returns (acc, update_op) and creates 2 local variables

accuracy = tf.metrics.accuracy(

    labels=tf.squeeze(tf_y), predictions=tf.argmax(output, axis=1))[1]

# control training and others

sess = tf.Session()

init_op = tf.group(tf.global_variables_initializer(),
                   tf.local_variables_initializer())

sess.run(init_op)

for step in range(100):

    # train and net output

    _, acc, pred = sess.run([train_op, accuracy, output], {tf_x: x, tf_y: y})

    # plot and show learning process

    if step % 5 == 0:

        plt.cla()

        plt.scatter(x[:, 0], x[:, 1], c=pred.argmax(1), s=100, lw=0, cmap='RdYlGn')

        plt.text(1.5, -4, 'Accuracy=%.2f' % acc, fontdict={'size': 20, 'color': 'red'})

        plt.pause(0.1)
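
One thing worth knowing about `tf.metrics.accuracy`: its two local variables (`total` and `count`) accumulate across calls, so the value returned by the update op is a running accuracy over every step so far, not the accuracy of the current batch alone. The sketch below mimics that behaviour in plain Python; the class name and its `update` method are hypothetical stand-ins, not TensorFlow API.

```python
class StreamingAccuracy:
    """Hypothetical stand-in for the update op of a running accuracy metric."""

    def __init__(self):
        self.total = 0.0  # correct predictions seen so far
        self.count = 0.0  # examples seen so far

    def update(self, labels, predictions):
        # add the current batch to the running totals, then report the ratio
        self.total += sum(1 for l, p in zip(labels, predictions) if l == p)
        self.count += len(labels)
        return self.total / self.count

metric = StreamingAccuracy()
first = metric.update([0, 1, 1, 0], [0, 0, 0, 0])   # 2 of 4 correct
second = metric.update([0, 1, 1, 0], [0, 1, 1, 0])  # 4 of 4 correct, 6 of 8 overall
```

This is why the accuracy shown during training rises more slowly than the per-step accuracy: early mistakes stay in the average until the local variables are re-initialized.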

softmax_cross_entropy vs. sparse_softmax_cross_entropy

| | softmax_cross_entropy | sparse_softmax_cross_entropy |
|---|---|---|
| Label Type | float32 / float64 | int32 / int64 |
| Label Shape | (batch_size, n_classes) | (batch_size) |
| Label Form | One-hot Encoding | Integer class index in [0, n_classes) |
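
The two losses compute the same quantity and differ only in how the labels are supplied. A small standard-library sketch makes this concrete; the function names mirror the TensorFlow ones for readability but are re-implemented here, and averaging over the batch is an assumption of this sketch.

```python
import math

def log_softmax(logits):
    # log of the softmax, computed stably by factoring out the max
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]

def softmax_cross_entropy(onehot_labels, logits):
    # labels: one float vector per example, shape (batch_size, n_classes)
    losses = [-sum(t * lp for t, lp in zip(row, log_softmax(z)))
              for row, z in zip(onehot_labels, logits)]
    return sum(losses) / len(losses)

def sparse_softmax_cross_entropy(labels, logits):
    # labels: one integer class index per example, shape (batch_size)
    losses = [-log_softmax(z)[k] for k, z in zip(labels, logits)]
    return sum(losses) / len(losses)

# Hypothetical batch of 3 examples and 2 classes
logits = [[2.0, 0.5], [0.1, 1.2], [1.0, 1.0]]
sparse_labels = [0, 1, 1]
onehot_labels = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]

dense_loss = softmax_cross_entropy(onehot_labels, logits)
sparse_loss = sparse_softmax_cross_entropy(sparse_labels, logits)
```

Both calls return the same value here. The sparse form avoids building the one-hot matrix, which is convenient when each example belongs to exactly one class.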

Save / Load Model

# SAVE

saver = tf.train.Saver()

sess = tf.Session()

# sess.run ...

# write_meta_graph is not recommended (can be very large)

saver.save(sess, './<dir>/<file_prefix>', write_meta_graph=False)

# LOAD

saver = tf.train.Saver()

saver.restore(sess, './<dir>/<file_prefix>')

# sess.run ...

sess.run('w1:0') # fetch the value of a tensor by name

w1 = sess.graph.get_tensor_by_name('w1:0') # get the original tensor object

TensorBoard

Run TensorBoard with `tensorboard --logdir path/to/logdir`.

tf.summary.histogram('pred', output)

tf.summary.scalar('loss', loss) # add loss to scalar summary

merge_op = tf.summary.merge_all()

writer = tf.summary.FileWriter('./log', sess.graph) # write to file

for step in range(100):

    # train and net output

    _, result = sess.run([train_op, merge_op], {tf_x: x, tf_y: y})

    writer.add_summary(result, step)

Dataset

tfx = tf.placeholder(npx_train.dtype, npx_train.shape)

tfy = tf.placeholder(npy_train.dtype, npy_train.shape)

dataset = tf.data.Dataset.from_tensor_slices((tfx, tfy))

dataset = dataset.shuffle(buffer_size=1000)

dataset = dataset.batch(32)

dataset = dataset.repeat(3) # repeat for 3 epochs; without repeat() the dataset yields 1 epoch

iterator = dataset.make_initializable_iterator()

bx, by = iterator.get_next() # returns get_next operation

l1 = tf.layers.dense(bx, 10, tf.nn.relu)

out = tf.layers.dense(l1, npy_train.shape[1])

loss = tf.losses.mean_squared_error(by, out)

train = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

sess = tf.Session()

sess.run([iterator.initializer, tf.global_variables_initializer()],
         feed_dict={tfx: npx_train, tfy: npy_train})

N = 200

# Number of iterations: min(N, ceil(num_train / batch_size) * epochs)

for step in range(N):

    try:

        # data is embedded into `train`

        _, trainl = sess.run([train, loss])

        if step % 10 == 0:

            # run loss op on test set

            testl = sess.run(loss, {bx: npx_test, by: npy_test})

    # raised when N > ceil(num_train / batch_size) * epochs

    except tf.errors.OutOfRangeError:

        print('Finished the last epoch.')

        break
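
The iteration count in the comment above can be checked with a few lines of arithmetic. This is only a sketch: the helper names and the sample sizes are hypothetical, and it assumes `batch` is applied before `repeat` (as in the pipeline above), so every epoch ends with one smaller batch when `num_train` is not a multiple of `batch_size`.

```python
import math

def batches_available(num_train, batch_size, epochs):
    # batches the iterator can produce before it is exhausted
    return math.ceil(num_train / batch_size) * epochs

def steps_run(n_requested, num_train, batch_size, epochs):
    # the loop stops at whichever limit is reached first
    return min(n_requested, batches_available(num_train, batch_size, epochs))

# 1000 samples, batches of 32, 3 epochs: 32 batches per epoch, 96 in total
short_run = steps_run(200, 1000, 32, 3)

# 6400 samples: 600 batches are available, so the loop bound N = 200 wins
full_run = steps_run(200, 6400, 32, 3)
```

In the first case the iterator runs dry after 96 steps and the `OutOfRangeError` branch ends the loop; in the second the loop completes all 200 steps without the exception.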
