Learn Dialogflow

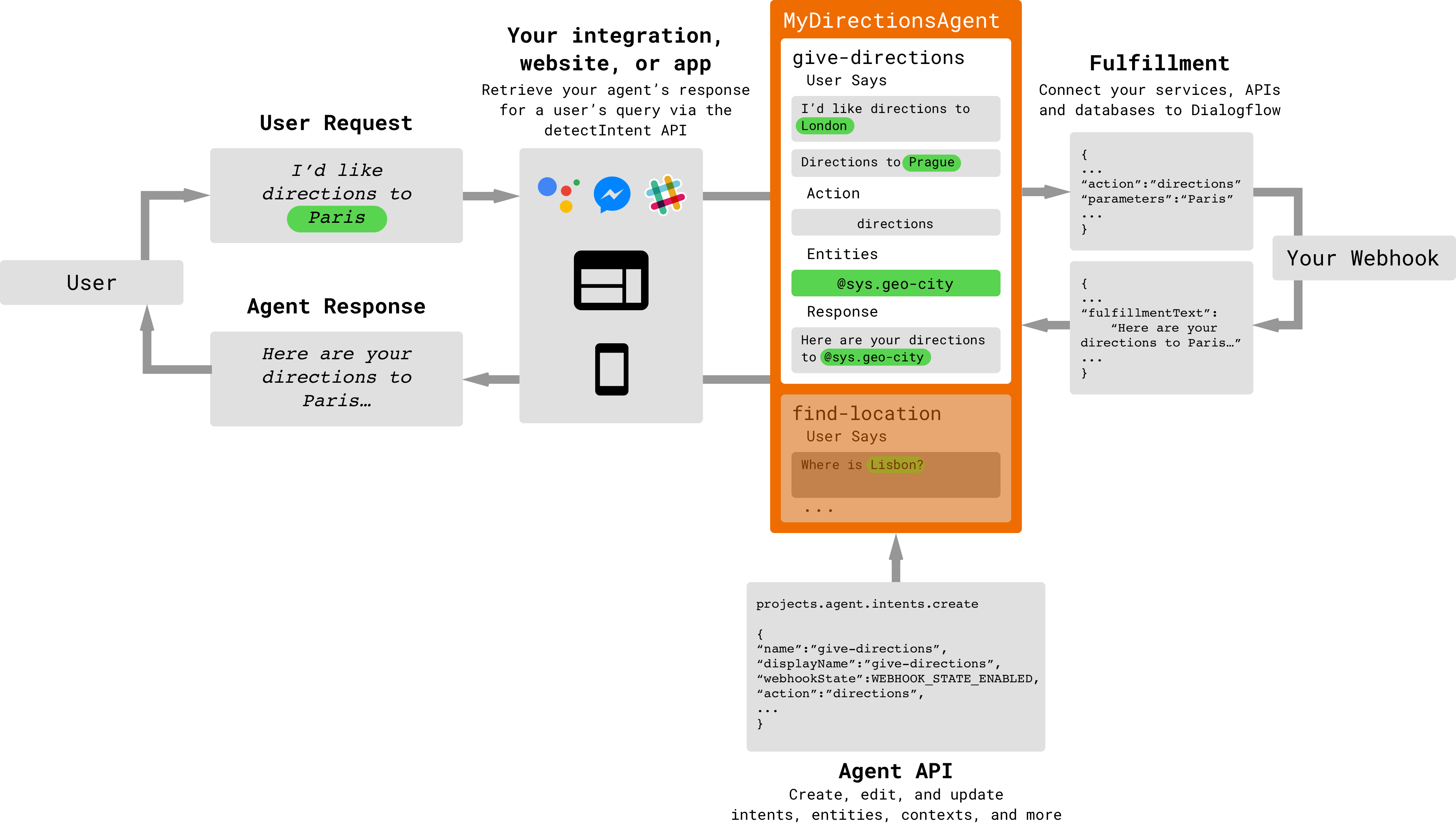

Dialogflow (formerly Api.ai, a company acquired by Google in 2016) provides an easy-to-use web UI for developers to create agents that handle the NLP (natural language processing) part of chatbot applications. Although its NLP ability is very limited, it is still better than most of the products on the market. Depending on the requirements of your chatbot, building an agent capable of parsing flexible user inputs still requires a decent amount of human effort.

An agent consists of two main parts: Intents and Entities. Intents define how user inputs should be parsed and how the dialog should proceed. Entities define the words that intents should match, along with any synonyms for them, so entities must be defined before they can be used in intents.

Entities

The entities used in a particular agent will depend on the parameter values that are expected to be returned as a result of the agent functioning. In other words, a developer does not need to create entities for every possible concept mentioned in the agent – only for those needed for actionable data.

There are 3 types of entities: system (defined by Dialogflow), developer (defined by a developer), and user (built for each individual end-user in every request) entities. Each of these can be classified as mapping (having reference values), enum (having no reference values), or composite (containing other entities with aliases and returning object type values) entities.
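The difference between mapping and enum entities can be sketched in plain Python. This is only an illustration of the idea, not Dialogflow's internal format: the `SIZE_MAPPING` and `COLOR_ENUM` data and both function names are hypothetical.

```python
from typing import Optional

# Hypothetical data for illustration only; not Dialogflow's storage format.
# Mapping entity: each reference value has a list of synonyms.
SIZE_MAPPING = {
    "small": ["small", "tiny", "little"],
    "large": ["large", "big", "huge"],
}

# Enum entity: plain entries with no reference values.
COLOR_ENUM = ["red", "green", "blue"]


def resolve_mapping(text: str, mapping: dict) -> Optional[str]:
    """Return the reference value whose synonyms include the text."""
    word = text.lower()
    for reference, synonyms in mapping.items():
        if word in synonyms:
            return reference
    return None


def resolve_enum(text: str, entries: list) -> Optional[str]:
    """Return the matched entry itself; there is no reference value to map to."""
    word = text.lower()
    return word if word in entries else None


print(resolve_mapping("Big", SIZE_MAPPING))
print(resolve_enum("Red", COLOR_ENUM))
```

A mapping entity normalizes several surface forms to one value, while an enum entity simply reports which entry was matched.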

Enum

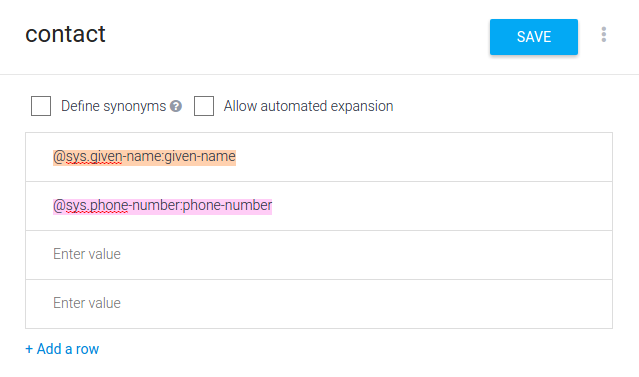

Enum type entities contain a set of entries that do not have mappings to reference values. Entries can contain simple words or phrases, or other entities. This can be used to match a set of parameters at once, kind of like a wrapper.

To create an enum type entity, uncheck the Define Synonyms option and add entries.

given-name and phone-number are wrapped in a contact enum

Extend System Entities with Enum

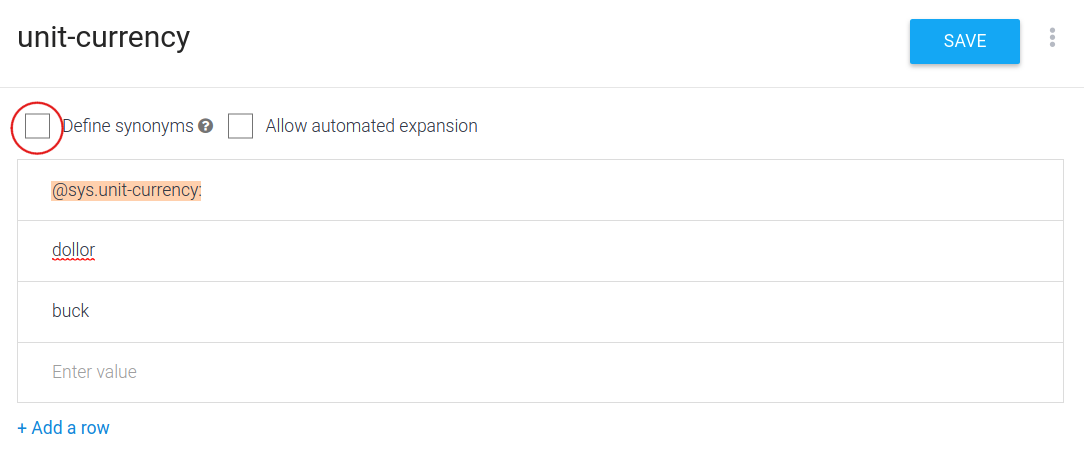

Another useful tip is to use an enum to extend a system entity or any other existing entity.

System entities can save developers a lot of time. However, some of them are not as comprehensive as you might expect, or not as flexible in matching patterns as you might wish. The good news is that you can extend system entities by creating your own entities!

For example, users may mistype dollar as dollor, which @sys.unit-currency doesn't match. To handle this, you can add dollor as an entry in an enum type entity.

Composite

When an enum type entity contains other entities and they are used with aliases, we call it a composite entity. Composite entities are most useful for describing objects or concepts that can have several different attributes.

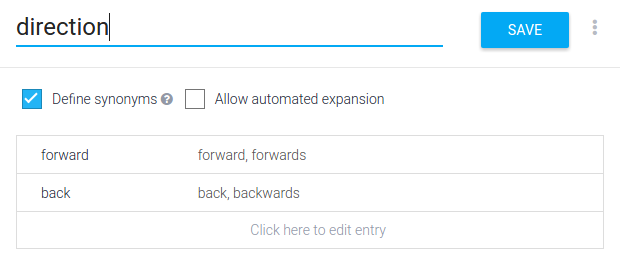

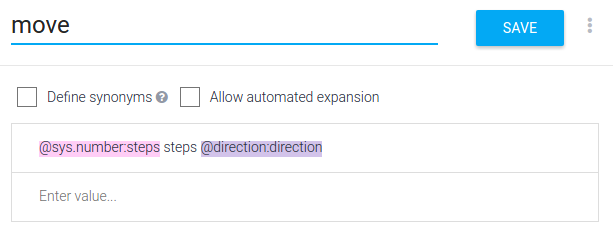

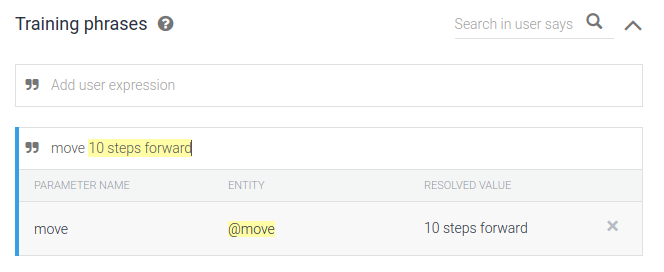

For example, a robot's movement can have two characteristics: direction and number of steps. In order to describe all possible combinations, you first need an entity that captures the direction.

Then you create a second entity that refers to the direction and the number of steps. Make sure to uncheck Define Synonyms.

By providing the example "Move 10 steps forward", the @move entity is annotated automatically.

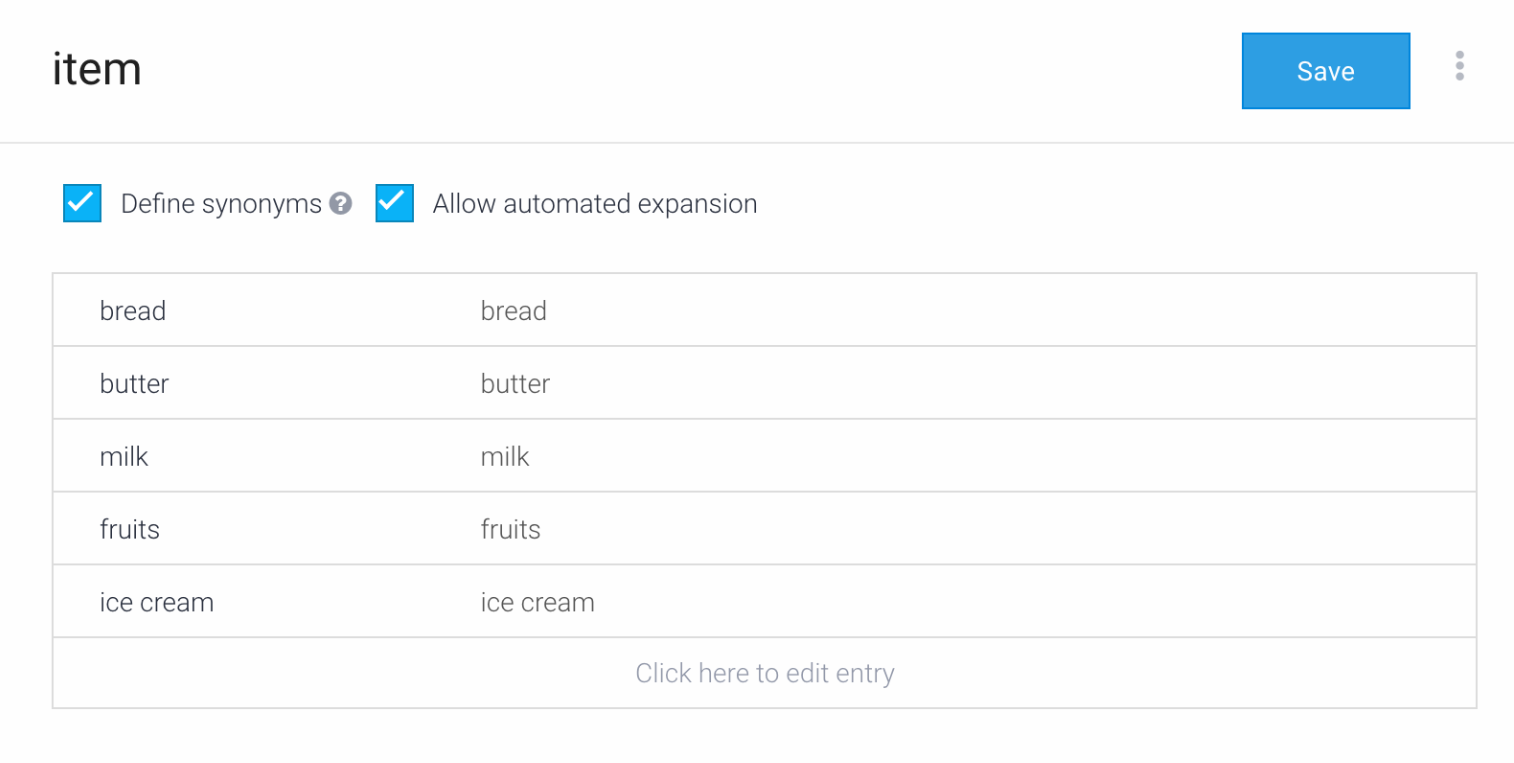

Allow Automated Expansion

This feature of developer mapping entities allows an agent to recognize values that have not been explicitly listed in the entity.

For example, consider a shopping list with items to buy:

If a user says "I need to buy some vegetables", "vegetables" will be picked up as a value, even though it's not included in the @item entity. With Automated Expansion enabled, the NLU sees the user's query is similar to the Training Phrases provided in the intent and can pick out what should be extracted as a new value.

Intents

Intents are the core of Dialogflow. They provide a flexible and robust dialog design tool, which can be a little overwhelming at first if you have no prior experience building natural-language-based applications. Intents contain six parts:

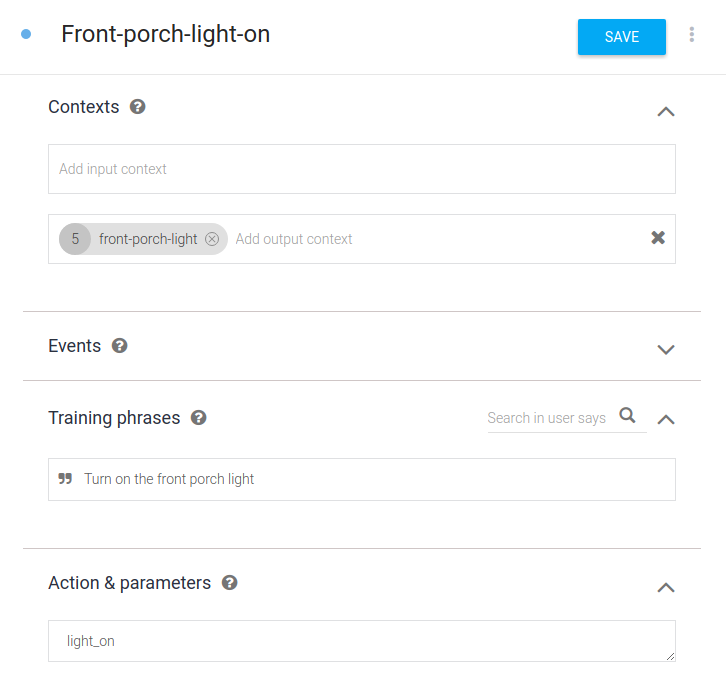

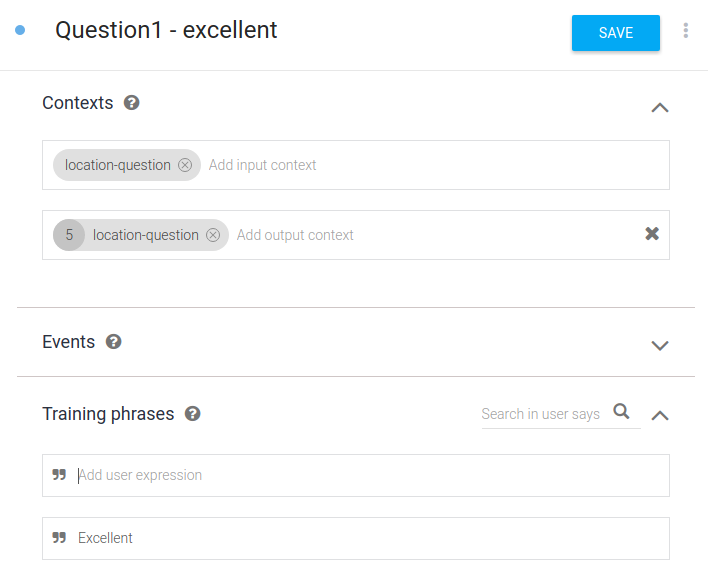

Context

Contexts are designed for passing on information from previous conversations or external sources (e.g., user profile, device information, etc.). They can also be used to manage conversation flow. A context represents the current context of a user's request. This is helpful for differentiating phrases which may be vague or have different meanings depending on the user’s preferences, geographic location, the current page in an app, or the topic of conversation.

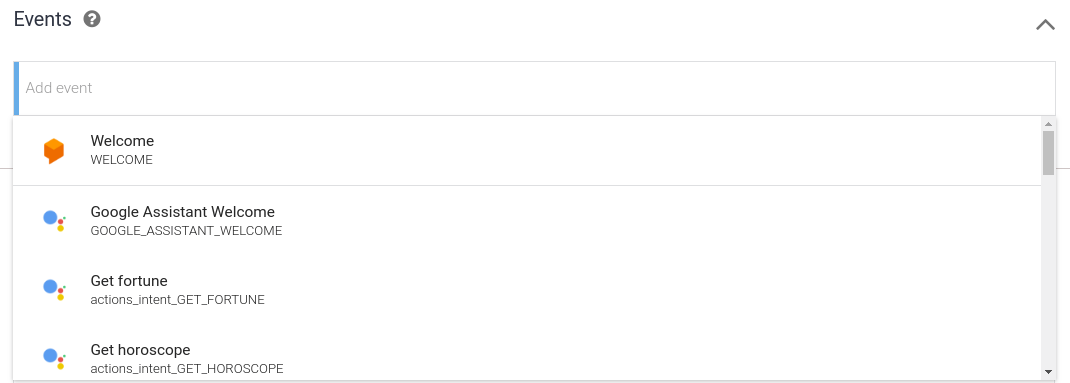

Event

Events allow you to invoke intents by an event name instead of a user query. You can define an event name in an intent in the developer console or via the API with the help of the /intents endpoint. Custom events can be triggered by sending GET or POST HTTP requests; for details see here.
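As a rough sketch of what such a request might look like, the snippet below builds (but does not send) a POST body for an event. It assumes the Dialogflow V2 REST `detectIntent` request shape, which may differ from the API version you are using; the project ID, session ID, and event name are hypothetical, and authentication is omitted.

```python
import json


def build_event_request(project_id: str, session_id: str,
                        event_name: str, language_code: str = "en"):
    """Build the URL and JSON body for triggering an intent by event name.

    Assumes the V2 detectIntent request shape; nothing is sent over the network.
    """
    url = (
        "https://dialogflow.googleapis.com/v2/"
        f"projects/{project_id}/agent/sessions/{session_id}:detectIntent"
    )
    body = {
        "queryInput": {
            "event": {"name": event_name, "languageCode": language_code}
        }
    }
    return url, json.dumps(body)


# Hypothetical identifiers, for illustration only.
url, body = build_event_request("my-project", "session-123", "welcome")
print(url)
print(body)
```

The resulting URL and body could then be sent with any HTTP client, together with valid credentials.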

Training Phrases

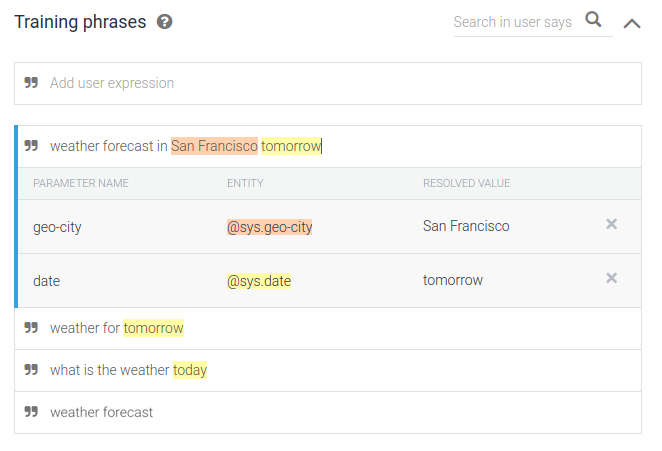

Training phrases are a list of possible user inputs (words or sentences) that you expect users to say to your bot to trigger this intent. Unlike in many other chatbot design tools, this list doesn't have to be exhaustive: Dialogflow will handle some common phrase variations for you. Still, the more comprehensive the training phrases are, the more robust your chatbot is.

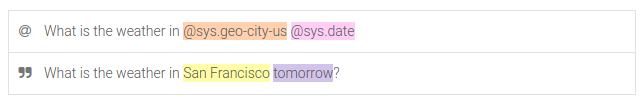

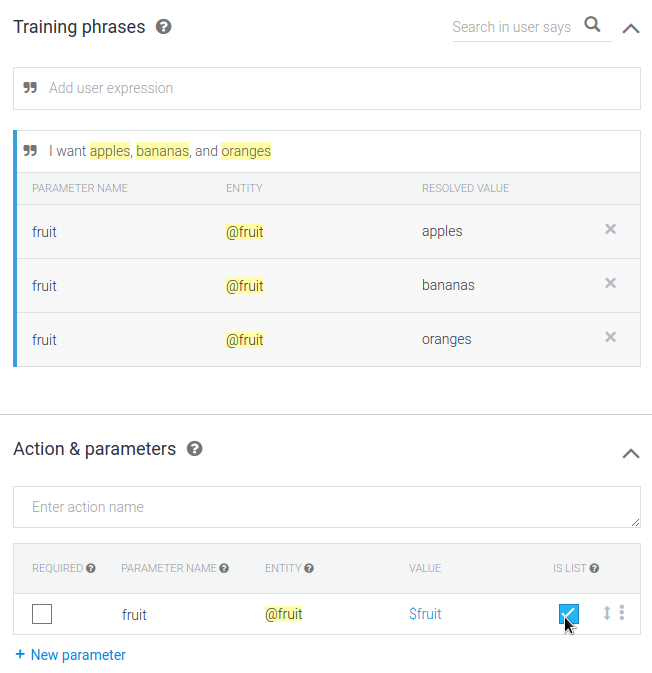

Training phrases can be annotated to tell Dialogflow which information (parameters) you want to extract. Annotating a phrase means specifying which parameters you want to match and which entities they belong to. Parameters may be annotated automatically if they are already defined in Actions & parameters. Entities can be system entities or custom ones.

(Click " icon in the front to change it to @)

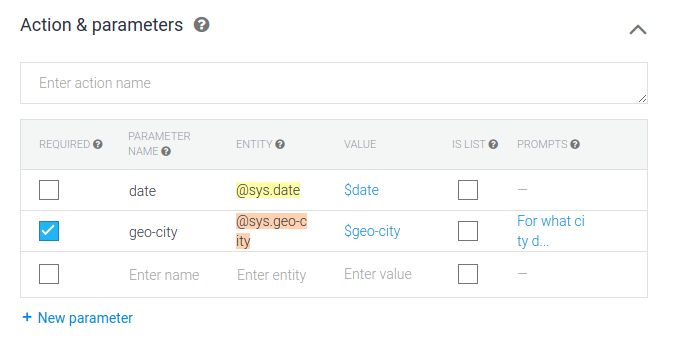

Actions and Parameters

An action corresponds to the step your application will take when a specific intent has been triggered by a user’s input. Actions can have parameters for extracting information from user requests.

If the user's input doesn't contain all of the required parameters, the bot will ask the user for each missing one, using the sentences defined in "prompts".
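The prompting behavior can be pictured with a small sketch. This is a simplified model, not Dialogflow's implementation: the `PARAMETERS` table, its prompts, and `next_prompt` are hypothetical names chosen for illustration.

```python
from typing import Optional

# Hypothetical parameter table: name -> (required?, prompt text).
PARAMETERS = {
    "item": (True, "What would you like to buy?"),
    "quantity": (True, "How many do you need?"),
    "note": (False, ""),
}


def next_prompt(extracted: dict) -> Optional[str]:
    """Return the prompt for the first required parameter that is still missing."""
    for name, (required, prompt) in PARAMETERS.items():
        missing = name not in extracted or extracted[name] == ""
        if required and missing:
            return prompt
    return None  # every required parameter has a value


print(next_prompt({"item": "apples"}))
```

Once `next_prompt` returns `None`, all required parameters are filled and the bot can move on to its response.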

The "Is list" option is appropriate for situations where a user’s input contains an enumeration, like “I would like apples, bananas, and oranges.” More on defining list values.

Extract Original Parameter Values

You may want to extract the original value from the user’s input to give a grammatically correct response. To define a parameter that uses original input, click on the value in the “Action” table to see the $parameter_value.original option.

From Composite Entities

By default, composite entities return object-type values. In order to extract string values from "sub-entities", you will need to manually define parameters with the following format:

$composite_entity_parameter_name.subentity_alias

From Contexts

To refer to a parameter value contained within an active context, you will need to manually define parameters with the following format:

#context_name.parameter_name

To reference parameter values, use $, and to reference context values, use #
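To make the two prefixes concrete, here is a minimal sketch of a resolver for `$parameter`, `$parameter.alias`, and `#context.parameter` references against plain dictionaries. It only mimics the notation and is not how Dialogflow evaluates these references; the `move` parameter, its `direction` and `steps` aliases, and the `robot` context are assumptions, and special suffixes such as `.original` are not handled.

```python
def resolve_reference(ref: str, parameters: dict, contexts: dict):
    """Resolve '$name', '$name.alias', or '#context.name' against plain dicts."""
    if ref.startswith("$"):
        name, _, alias = ref[1:].partition(".")
        value = parameters[name]
        # A composite parameter is an object; the alias selects one sub-entity.
        return value[alias] if alias else value
    if ref.startswith("#"):
        context, _, name = ref[1:].partition(".")
        return contexts[context][name]
    raise ValueError(f"not a reference: {ref!r}")


# Hypothetical values, for illustration only.
parameters = {"move": {"direction": "forward", "steps": 10}}
contexts = {"robot": {"speed": "fast"}}

print(resolve_reference("$move.direction", parameters, contexts))
print(resolve_reference("#robot.speed", parameters, contexts))
```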

Responses

Responses are the information Dialogflow sends back to users after gathering all the required parameters. Dialogflow provides these types of responses (for a detailed description of each platform, see here):

Text

TIP

To add a new line in the UI, press Shift+Enter. If you create intents via the API, use \n in the intent object.

Image

Card

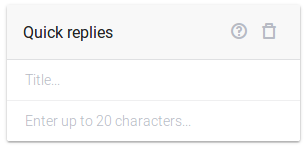

Quick replies

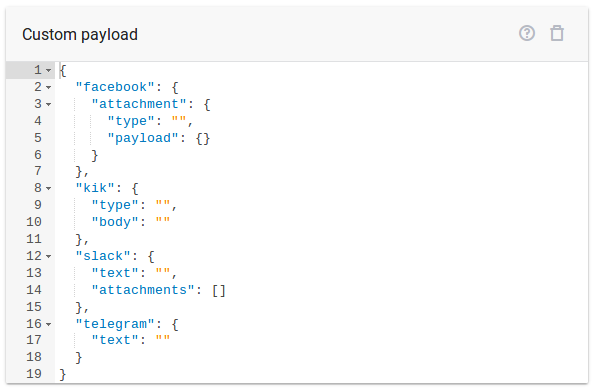

Custom payload

Fulfillment

Fulfillment provides a way for developers to get the information extracted by Dialogflow. After an intent is fulfilled, Dialogflow sends a POST request to your endpoint and passes JSON-formatted data to your server so you can handle users' requests more dynamically.

Dialogflow currently only provides a Node.js Fulfillment Library, but you can do this in whatever language you like. Here are Python fulfillment samples: weather reporter and translator.
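A fulfillment handler can be reduced to a function from the request JSON to a response JSON. The sketch below assumes the V2 webhook format (`queryResult` in the request, `fulfillmentText` in the response), which may not match the API version your agent uses. The intent name `order.fruit` and the `item` parameter are hypothetical, and the web server that would receive the POST is left out.

```python
def handle_webhook(body: dict) -> dict:
    """Turn a parsed webhook request into a response dictionary.

    Assumes the V2 webhook request shape; wire this into any web framework.
    """
    query_result = body.get("queryResult", {})
    intent_name = query_result.get("intent", {}).get("displayName", "")
    params = query_result.get("parameters", {})

    if intent_name == "order.fruit":  # hypothetical intent name
        items = params.get("item", [])
        if isinstance(items, str):  # a non-list parameter arrives as a string
            items = [items]
        if items:
            text = "Ordering: " + ", ".join(items)
        else:
            text = "What would you like to order?"
    else:
        text = "Sorry, I can't help with that yet."

    return {"fulfillmentText": text}


request_body = {
    "queryResult": {
        "intent": {"displayName": "order.fruit"},
        "parameters": {"item": ["apples", "bananas", "oranges"]},
    }
}
print(handle_webhook(request_body))
```

Keeping the handler a pure function makes it easy to test without running a server or calling Dialogflow.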

Control Machine Learning Involvement

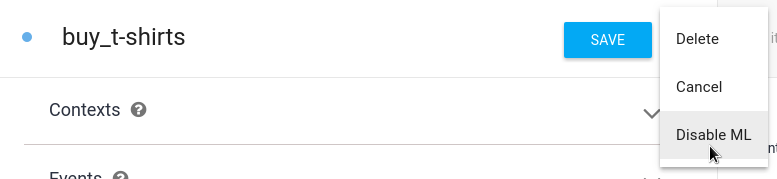

Machine Learning On/Off

You can turn off machine learning for individual intents by clicking on the options icon in the upper right-hand corner and choosing Disable ML.

When Machine learning is off, the intent will be triggered only if there is an exact match between the user’s input and one of the examples provided in the Training Phrases section of the intent.

To configure the setting when creating intents via /intents endpoint, use "auto": true for enabling machine learning or "auto": false for disabling machine learning in the intent object.

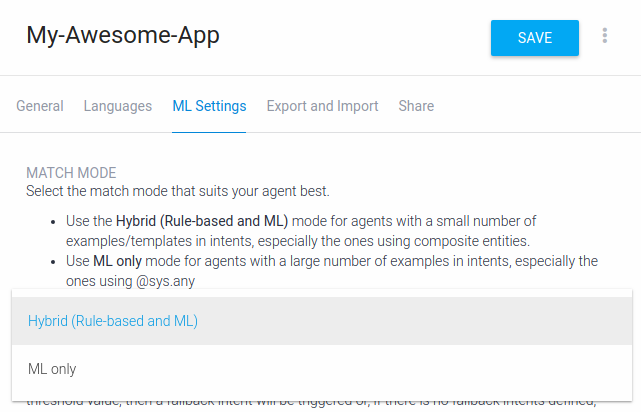

Match Mode

Match Mode controls how user requests are matched to specific intents and how entities are used to extract relevant data from them. To set it, click on the gear icon for your agent, then ML Settings, and choose one of the following options from the drop-down menu under Match Mode.

Hybrid (Rule-based and ML) - Is best for agents with a small number of examples in intents and/or wide use of templates syntax and composite entities.

ML only - Can be used for agents with a large number of examples in intents, especially the ones using @sys.any or very large developer entities.

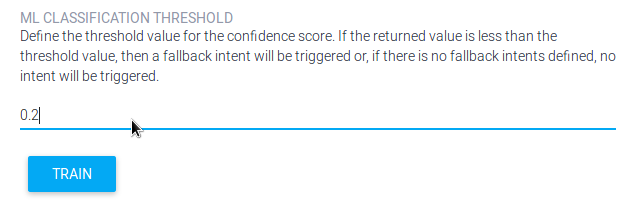

ML Classification Threshold

To filter out false positive results and still get variety in matched natural language inputs for your agent, you can tune the machine learning classification threshold. If the returned "score" value in the JSON response to a query is less than the threshold value, then a fallback intent will be triggered or, if there are no fallback intents defined, no intent will be triggered.
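The threshold rule can be sketched as a small function. This models only the decision described above, not how Dialogflow computes scores; the intent names and score values are made up for illustration.

```python
from typing import Optional


def pick_intent(scores: dict, threshold: float,
                fallback: Optional[str] = None) -> Optional[str]:
    """Return the best-scoring intent, or the fallback if its score is below
    the threshold. With no fallback defined, the result is None (no match)."""
    if not scores:
        return fallback
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else fallback


# Hypothetical scores, for illustration only.
scores = {"order.fruit": 0.42, "small-talk.greeting": 0.17}
print(pick_intent(scores, threshold=0.3, fallback="fallback"))
print(pick_intent(scores, threshold=0.5, fallback="fallback"))
print(pick_intent(scores, threshold=0.5))
```

Raising the threshold trades fewer false positives for more requests ending up in the fallback intent.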

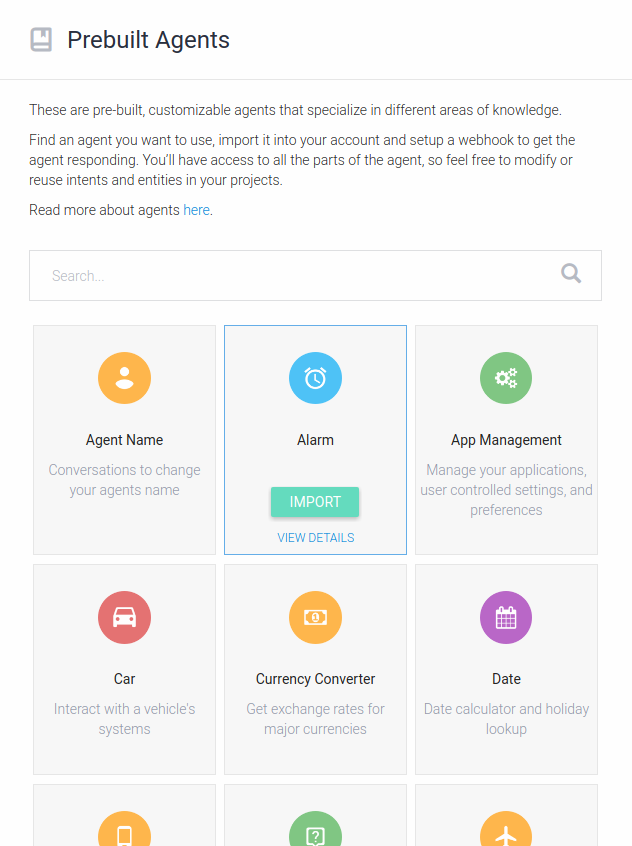

Pre-built Agents

Dialogflow provides several pre-built agents for its users to quickly spin up a relevant bot, but most of them are not comprehensive and have no built-in responses, so they are not ten-minutes-to-production solutions.

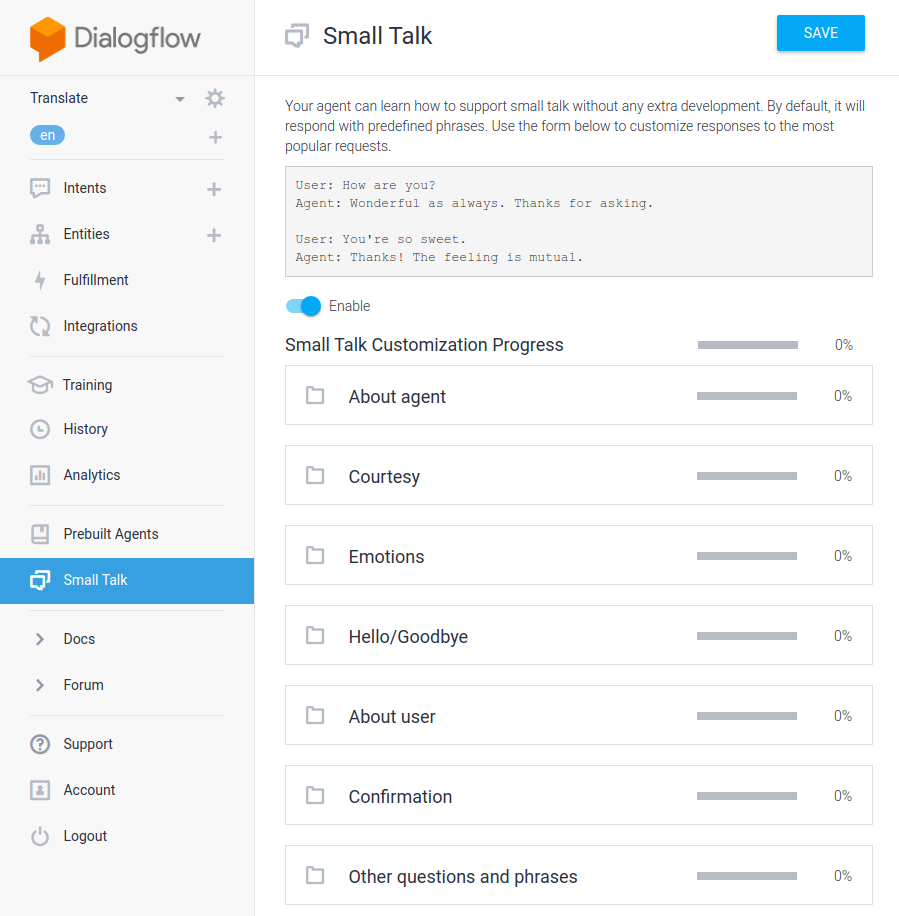

Small Talks

Dialogflow includes an optional feature called Small Talk, which is used to provide responses to casual conversation. This feature can greatly improve a user's experience when talking to your agent.

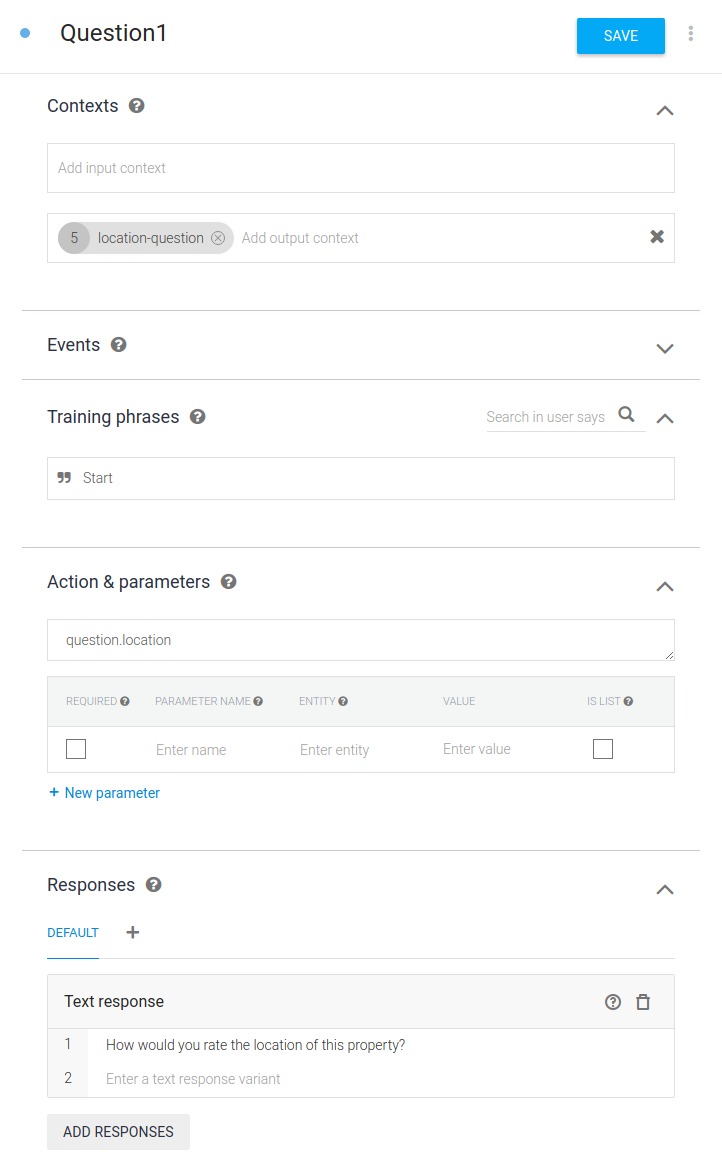

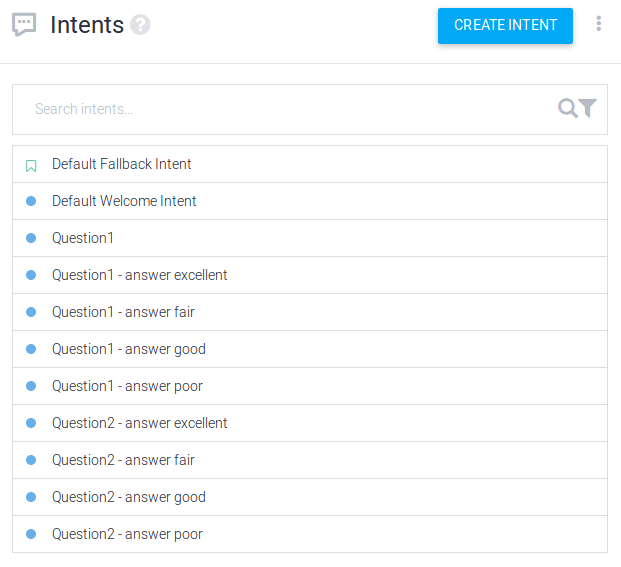

Build Non-Linear Dialog

Non-linear dialog, or conditional dialog, is a dialog with branches. Based on the user's response, the chatbot will choose a different conversation path. In Dialogflow, a branch is an intent, and the if-else condition is triggered automatically based on the training phrases of other intents that use a pre-defined context as their input context.

First, set an output context for the entry intent:

And then use the context as an input context:

Here we use Excellent as a training phrase, so if the user's reply to the first bot response is Excellent, this intent will be triggered. The branches can grow to a large number of intents.

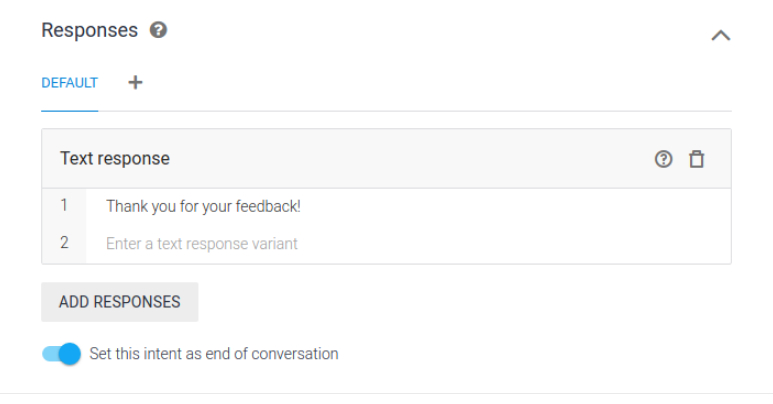

Finally, remember to enable Set this intent as end of conversation.
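The branching mechanism can be simulated in a few lines. This is a toy model under stated assumptions: the intent names, the `awaiting-feeling` context, and the phrases are hypothetical, and exact string matching stands in for Dialogflow's ML-based matching.

```python
from typing import Optional

# Hypothetical intents. Each lists the contexts it requires (input), the
# contexts it activates (output), and its training phrases.
INTENTS = [
    {
        "name": "ask-feeling",
        "input_contexts": [],
        "output_contexts": ["awaiting-feeling"],
        "phrases": ["hello"],
        "end_conversation": False,
    },
    {
        "name": "feeling-excellent",
        "input_contexts": ["awaiting-feeling"],
        "output_contexts": [],
        "phrases": ["excellent"],
        "end_conversation": True,
    },
    {
        "name": "feeling-bad",
        "input_contexts": ["awaiting-feeling"],
        "output_contexts": [],
        "phrases": ["terrible"],
        "end_conversation": True,
    },
]


def match_intent(text: str, active_contexts: set) -> Optional[dict]:
    """Return the first intent whose input contexts are all active and whose
    phrases contain the text (exact match, a stand-in for ML matching)."""
    for intent in INTENTS:
        contexts_ok = set(intent["input_contexts"]) <= active_contexts
        if contexts_ok and text.lower() in intent["phrases"]:
            return intent
    return None


# The entry intent activates the context that unlocks the branch intents.
entry = match_intent("Hello", set())
branch = match_intent("Excellent", set(entry["output_contexts"]))
print(entry["name"], "->", branch["name"])
```

Without the output context from the entry intent, "Excellent" matches nothing, which is what keeps each branch tied to its place in the conversation.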

WARNING

For agents with more than 50 entities or more than 600 intents, you need to update the algorithm manually. To do so, click on the gear icon for your agent, then ML Settings, and click the Train button.